Abstract

The success of human civilization is rooted in our ability to cooperate by communicating and making joint plans. We study how artificial agents may use communication to better cooperate in Diplomacy, a long-standing AI challenge. We propose negotiation algorithms allowing agents to agree on contracts regarding joint plans, and show they outperform agents lacking this ability. For humans, misleading others about our intentions forms a barrier to cooperation. Diplomacy requires reasoning about our opponents’ future plans, enabling us to study broken commitments between agents and the conditions for honest cooperation. We find that artificial agents face a similar problem as humans: communities of communicating agents are susceptible to peers who deviate from agreements. To defend against this, we show that the inclination to sanction peers who break contracts dramatically reduces the advantage of such deviators. Hence, sanctioning helps foster mostly truthful communication, despite conditions that initially favor deviations from agreements.

Similar content being viewed by others

Introduction

Coordination, cooperation and negotiation play a key role in our everyday lives, from small-scale problems such as safely driving on roads and scheduling meetings to large-scale efforts such as international trade or mediating peace. A key driver to the success of humans as a species is our ability to cooperate with others1,2. Systems based on artificial intelligence (AI) control a growing part of our lives, from personal assistants to high-stake decisions such as authorizing loans or automated job market screening3,4. Cooperation and negotiation are central to AI5,6,7,8,9,10,11, and AI systems already affect human trade and negotiation through algorithmic trading and bidding10,12,13,14,15,16. It is thus imperative that we endow our AI systems with the tools to coordinate and negotiate with others3,7,11,17,18.

Game playing has been a focus area of AI since its inception. Progress on search, reinforcement learning, and game theory9,19,20,21 led to successes in Chess22, Go23, Poker24, control25, and video games26,27. However, the majority of such work deals with two-player games that are fully competitive (zero-sum), which are mathematically easier to analyze18,28, but cannot capture alliance formation and negotiation. Similarly, work on games where agent goals are fully aligned29,30, or two teams of agents engaged in a competition27,31,32, lacks the need to negotiate. In contrast, many real-life domains require negotiation as the goals of participants only partially align. These domains exhibit tensions between cooperation and competition7,33,34,35, making them harder to tackle using AI agents21,36,37. Communication has a key role in such settings as it enables us to share beliefs, goals and intents with others, allowing us to negotiate, form alliances and find mutually beneficial agreements10.

Diplomacy38 is a prominent 7-player board game that focuses on communication, cooperation and negotiation. An introduction to the rules of Diplomacy is given in Supplementary Note 1. Diplomacy is played on a map of Europe partitioned into provinces, some of which are special and marked as Supply Centers. Each player attempts to own the majority of the supply centers, and controls multiple units (armies or fleets). A unit may support another unit (owned by the same or another player), allowing it to overcome resistance by other units. Due to the inter-dependencies between units, players stand to gain by negotiating and coordinating moves with others. Hence, while ultimately a competitive game, making progress in Diplomacy requires teaming up with others. In each round, every player decides on the actions taken by each of its units, and these moves are executed simultaneously. This yields an enormous action space of 1021 to 1064 legal actions per turn, and an immense game tree size of 1090039; for comparison, Chess has fewer than 100 legal actions per turn, with a game tree size of 10123. The heart of Diplomacy is the negotiation phase occurring before entering moves, where players communicate trying to agree on the moves they are about to execute.

These properties make Diplomacy a key AI challenge domain for negotiation and alliance formation in a large-scale realistic mixed-motive setting. AI approaches for Diplomacy have been researched since the 1980s40,41. The standard version is Press Diplomacy, which includes a negotiation phase prior to each move phase. In human games this takes place through conversations in natural language: players may converse privately by stepping into another room, or through a private chat channel when playing online. For AI, researchers have proposed computer-friendly negotiation protocols42, sometimes called Restricted-Press.

A much simpler version is No-Press Diplomacy, where direct communication between players is not allowed, eliminating the negotiation phase. We contrast No-Press and Press Diplomacy in Fig. 1.

Left: In No-Press Diplomacy, players may not directly communicate to coordinate a joint plan. AI agents each select actions on their own. Right: In Press Diplomacy players directly communicate with each other to decide on a joint course of action. Agent algorithms determine how they agree on a joint plan. We express such plans using a contract regarding future actions. The move phase is identical in Press and No-Press Diplomacy, and the next board state depends only on the actions chosen by the players in the move phase. Negotiation in Press Diplomacy only affects how the game progresses if it results in players selecting different actions in the move phase. As players are free to choose any legal action regardless of what they say during the negotiation phase, Press and No-Press Diplomacy are exactly the same game except for the ability of participants to communicate. Background image by rawpixel.com on Freepik.

Many AI approaches for Diplomacy were proposed through the years, mostly relying on hand-crafted protocols and rule-based systems42,43,44,45,46, and falling far behind human performance (both with and without communication). Paquette et al. achieved a breakthrough in game performance47, training a neural network called DipNet to imitate human behavior based on a dataset of human games. While DipNet has defeated previous state-of-the-art agents by a wide margin, it only handles No-Press Diplomacy, employing no communication or negotiation. Recent work improved the performance of such agents using deep reinforcement learning39 or deep regret matching48,49, but still without communication or negotiation. Our Contribution: We leverage Diplomacy as an abstract analog to real-world negotiation, providing methods for AI agents to negotiate and coordinate their moves in complex environments. Using Diplomacy we also investigate the conditions that promote trustworthy communication and teamwork between agents, offering insight into potential risks that emerge from having complex agents that may misrepresent intentions or mislead others regarding their future plans.

We consider No-Press Diplomacy agents trained to imitate human gameplay and improved using reinforcement learning39, and augment them to play Restricted-Press Diplomacy by endowing them with a communication protocol for negotiating a joint plan of action, formalized in terms of binding contracts. Our algorithms agree on contracts by simulating what might occur under possible agreements, and allow agents to win up to 2.5 times more often than the unaugmented baseline agents that cannot communicate with others. Human cooperation is impeded by the potential impact of breaking agreements or misleading others about future plans50,51. We use Diplomacy as a sandbox to study how the ability to break past commitments erodes trust and cooperation, focusing on ways to mitigate this problem and identifying the conditions for honest cooperation. We find that artificial agents face a similar problem as humans regarding breaches of trust: communities of communicating agents are susceptible to peers that may deviate from agreements. When agreements are non-binding, an agent may agree on one course of action in a turn but choose an action violating this agreement in the next turn. We refer to such agents as deviators, and show that even deviators that select their actions using simple algorithms win almost three times as often as peers that never break their contracts. To defend against this, we endow agents with the inclination to retaliate when agreements are violated, and find that this sanctioning behavior dramatically reduces the advantage of deviators. When the majority of the agents sanction peers that break agreements, simple deviators win less frequently than agents that always abide by their contracts.

Finally, we consider how a deviator may optimize its behavior when playing against a population of agents that sanction peers that break agreements, and find that the deviator is best-off adapting its behavior to very rarely break its agreements. Such sanctioning behavior thus helps foster mostly-truthful communication among AI agents, despite conditions that initially favor deviations from agreements. However, sanctioning is not an ironclad defense: the optimized deviator does gain a slight advantage over the sanctioning agents, and sanctioning is costly when peers break agreements, so the population of sanctioning agents is not completely stable under learning.

We view our results as a step towards evolving flexible communication mechanisms in artificial agents, and enabling agents to mix and adapt their strategies to their environment and peers. Our work offers two high-level insights. Firstly, algorithms that reason about the intents of others and employ game-theoretic solutions allow communicating agents to outperform peers through better coordination, even under simple interaction protocols. Secondly, the ability of participants to break from prior agreements is a barrier hindering cooperation between AI agents; however, even in complex environments, simple principles such as negatively responding to the breach of agreements help promote truthful communication and cooperation.

Results

Negotiating with binding agreements

Our proposed method takes non-communicating agents trained for No-Press Diplomacy, and augments them with mechanisms for negotiating a joint plan of action with peers. To obtain the initial non-communicating agents we use existing reinforcement learning agents39, discussed in the section “The sampled best responses procedure”. This method constructs neural networks capturing a policy which maps the board state to the game action to be taken, and a value network which predicts the win probabilities of the agents. We provide these agents with a protocol for negotiating bilateral agreements regarding the actions they would take.

We first consider binding agreements, where agents who agree on a joint plan cannot deviate from it later. We view a contract as a restriction over the actions each of the agents may take in the future. For simplicity we focus only on contracts relating to the upcoming timestep. Given two agents pi, pj who must act in a state s, we denote by Ai(s), Aj(s) the respective sets of actions the agents may take. A potential contract D is a pair D = (Ri,Rj) where Ri ⊆ Ai is the restricted subset of actions each side may take under the contract; an action a ∈ Ai⧹Ri is one that violates the contract D. One type of contract D = (Ri,Rj) is where Ri consists of a single action: Ri = {ai} where ai ∈ Ai(s), meaning that agent pi commits to taking the action ai next turn. Two contracts \({D}^{1}=({R}_{i}^{1},{R}_{j}^{1}),{D}^{2}=({R}_{i}^{2},{R}_{j}^{2})\) are identical if \({R}_{i}^{1}={R}_{i}^{2}\) and \({R}_{j}^{1}={R}_{j}^{2}\), denoted as D1 = D2. Figure 2 illustrates these concepts.

Left: a part of the Diplomacy board with three players. Middle: a restriction allowing only certain actions RRed to be taken by the Red player; in this example, the unit in Ruhr may not move to Burgundy, while the unit in Piedmont must move to Marseilles. The set RRed consists only of the actions that fulfill these restrictions. Right: a contract D = (Rred,RGreen) consists of a restriction for both players; in this example, Red’s actions are restricted as in the middle, while Green is restricted to actions where the unit in Brest moves to Gascony. Background image by kjpargeter on Freepik.

Protocols

A protocol is a set of negotiation actions through which agents may communicate and agree on contracts. We consider a set of n agents P = {p1,…,pn} about to take simultaneous actions. We consider two general protocols for reaching pairwise agreements regarding future actions. The Mutual Proposal protocol places restrictions on the actions both sides may take. The Propose-Choose protocol enables both sides to agree on each taking a specific move.

Mutual Proposal protocol

Under this protocol every pair of agents pi, pj ∈ P has only a single possible contract between them, depending on the state; we call the specification of this the contract type. Each agent pi ∈ P may propose, to each of the remaining agents, to enter into the contract specified by the contract type. We denote the proposal of agent pi to pj as Di→j: if pi does not propose to enter into a contract with pj, this is denoted as \({D}^{i\to j}={{\emptyset}}\). Two agents agree to a contract if and only if they both propose the contract to one another so \({D}^{i\to j}={D}^{j\to i}\,\ne\, {{\emptyset}}\). If either side does not make an offer, i.e., \({D}^{i\to j}={{\emptyset}}\) or \({D}^{j\to i}={{\emptyset}}\), no agreement is reached, and neither side is restricted in its actions. For Diplomacy we use a Peace contract type. An action by agent pi violates the peace with agent pj if one of the pi’s units attempts to move into a province occupied by pj or enter or hold a supply center owned by pj, or to assist another unit to do so. The Peace Contract D = (Ri,Rj) between pi and pj is defined by Ri and Rj containing only the actions that do not violate the peace between pi and pj.

Propose-Choose protocol

This protocol consists of two stages: a Propose phase where each agent may propose a single contract to each other agent, and a Choose phase where each agent selects one of the contracts involving them (one they proposed, or one proposed to them). We refer to contracts proposed in the first phase as contracts On The Table. We denote the contract that pi proposes to pj as Di→j. We say a contract D involves agent pi if D is either a contract D = Di→j that pi proposed to another agent pj or if D is a contract D = Dj→i that some other agent pj proposed to pi. In the choose phase, each agent pi may choose only one contract D that involves them out of all the contracts on the table. Denote the contract chosen by pi as \({D}_{i}^{*}\). In this protocol, two agents only reach an agreement if they choose exactly the same contract, i.e., \({D}_{i}^{*}={D}_{j}^{*}\) (e.g., both pi and pj choose Di→j or both choose Dj→i). In our experiments we enhance the protocol slightly, allowing each player pi in the Choose phase to either only choose a contract Di→j or Dj→i for some pj, or to optionally also indicate that they are willing to accept either of Di→j, Dj→i; when both pi, pj indicate that they are willing to accept both Di→j, Dj→i but rank these two contracts in a different order, we select one of the two contracts randomly. We use the Propose-Choose protocol with contracts that completely specify what each unit of each side would do the next turn, i.e., a contract D = ({ai},{aj}). Each agent could potentially propose n − 1 contracts and could potentially receive n − 1 contracts, so an agent pi who wishes to reach an agreement with pj is competing with the other agents to get pj to choose their contract. If an agent proposes a contract that is mostly beneficial to itself, the other agent is unlikely to choose that contract. Hence, agents must reason about which contracts others are likely to accept.

Overview of the Negotiation Algorithms

We summarize the negotiation algorithms, with full details in the “Methods” section. We propose a method called Restriction Simulation Sampling (RSS) for the Mutual Proposal protocol, and a method called Mutually Beneficial Deal Sampling (MBDS) for Propose-Choose. We evaluate these on Diplomacy using Peace contracts for RSS and contracts fully specifying the next turn moves for MBDS, but our methods generalize to other contract types (see Supplementary Note 6).

Our methods identify mutually beneficial deals by simulating how the game might unfold under various contracts. We use the Nash Bargaining Solution (NBS) from game theory52 as a principled foundation for identifying high quality agreements. NBS fulfills appealing negotiation and fairness axioms53 and takes into account the utility of the agents when no deal is reached, called the no-deal baseline or BATNA—Best Alternative To a Negotiated Agreement54. Intuitively, NBS strikes a balance between the benefits that either side obtains. We justify the use of the NBS in the “Mutually beneficial deal sampling (MBDS)” section.

Unfortunately, directly calculating expected utilities through game simulation and finding the optimal NBS contract are computationally intractable: the game may unfold in many ways as players may take many possible actions, and there may be a vast space of potential contracts to search through. We address these difficulties through a Monte-Carlo simulation, sampling from the space of potential future states using policy and value functions, which are common machinery in the AI literature55. Multiple approaches have been proposed for constructing policy and value functions for Diplomacy, such as imitation learning47 or regret minimization48; we use functions trained via reinforcement learning39. Following this training, these functions are held fixed throughout the experiments (colloquially, we freeze the learning). Our methods simulate what might occur in the next turn by sampling from various policies, such as the unconstrained policy of the underlying No-Press Diplomacy agent, or a constrained policy that agent pi must follow after agreeing to a contract D = (Ri,Rj). This approach is illustrated in Fig. 3.

Left: current state in a part of the board, and a contract D = (Ri,Rj) agreed between the Red and Green players (same as in Fig. 2). Right: multiple possible next states; the actions of Red and Green are sampled from the restricted policies \({\pi }^{{R}_{i}},{\pi }^{{R}_{j}}\) allowing only certain actions as specified by the contract, and the actions of the Blue player sampled from the unrestricted policy. Background image by rawpixel.com and kjpargeter on Freepik.

Negotiators outperform non-communicating agents

We show that augmenting agents with our negotiation mechanism allows them to outperform baseline non-communicating agents lacking this mechanism. Both the negotiators and the baseline use the same policy function obtained using reinforcement learning39, and select actions from it using the same algorithm (the Sampled Best Response procedure39 described in the “The sampled best responses procedure”) section. However, only the negotiators are able to communicate: negotiators interact via the RSS method in the Mutual Proposal protocol, or via MBDS in the Propose-Choose protocol (see the “Baseline Negotiator algorithms” section). Diplomacy has seven players, so we consider k communicating agents and 7 − k non-communicators for k ∈ {1,2,…,6}. Figure 4 shows the advantage our communicating agents have over the non-communicating baseline: communicators win up to 2.49 times more often than non-communicators (or 56% more often for the Mutual-Proposal protocol), highlighting the advantage agents gain through cooperation.

Left: Mutual Proposal protocol. Right: Propose-Choose protocol. The x axis is the number of communicating agents, and the y axis is the winrate advantage of the communicating agent over the baseline, expressed as a ratio \(\frac{{W}_{C}}{{W}_{N}}\), where WC is the average winrate of the communicating agents and WN of the non-communicating agents, measured over 10,000 games. The advantage each communicating agent enjoys grows as there are more communicating peers to make agreements with. The Propose-Choose protocol, where agents agree on an exact fully specified joint action next turn, results in a larger advantage for communicator agents over the non-communicating baseline (though a contract type other than Peace could potentially allow for stronger agent performance in the Mutual-Proposal protocol). Note that for Propose-Choose, the communicating agents exclude the non-communicating agents from consideration to prevent themselves from wastefully choosing contracts that cannot be agreed on.

Negotiating with non-binding agreements

A key limitation of our results in the “Negotiating with binding agreements” section is the implicit assumption that agreements are binding—once an agent has agreed to a contract, they do not deviate from it. We now lift this assumption, and consider agents who may agree to a contract in one turn and deviate from it the next. This serves multiple purposes. First, a key feature of the rules of Diplomacy is that agreements made during the negotiation phase are not binding38 (i.e., communication is cheap talk56). Much more importantly, in many real-life settings we can also not assume that agreements are binding—people may agree to act in a certain way, then fail to meet their commitments later on. To enable cooperation between AI agents, or between agents and humans, we must examine the potential pitfall of agents strategically deviating from agreements, and ways to remedy this problem. Diplomacy is a board game that can serve as a sandbox, i.e., an abstract analog to real world domains, enabling us to explore this topic.

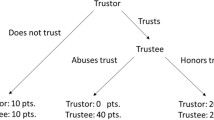

Our results on deviation from contracts consider multiple types of communicating agents, shown in Fig. 5 (in contrast to No-Press Diplomacy agents47,48,49 such as the non-communicating baseline39). We call the agents of the section “Negotiating with binding agreements” Baseline Negotiators as they operate assuming agreements are binding. We consider Deviator Agents which overcome Baseline Negotiators by deviating from agreed contracts. The “Baseline negotiatiors are defeated by deviators who break contracts” section discusses Simple Deviators and Conditional Deviators, and shows they outperform the Baseline Negotiators. The “Mitigating the deviation problem: defensive agents” section considers Defensive Agents, such as the Binary Negotiators and Sanctioning Agents, which deter Deviators from breaking contracts while retaining the advantages stemming from communication. It also describes the Learned Deviator, a Deviator optimized against a population of Defensive Agents, showing that it learns to rarely break its contracts. The full algorithms appear in the “Methods” section). Our Defensive Agents reproduce human behaviors of ceasing to trust peers who break promises or sanctioning such deviations, inspired by work on the Evolution of Cooperation57 (see comparison in Supplementary Note 7, as well as a discussion of differences between Diplomacy and repeated games). We examine the conditions for honest cooperation in the large-scale temporally extended setting of Diplomacy, complementing work on cooperation in repeated games, where multiple players repeatedly interact by playing an identical simple stage game with one another.

Baseline negotiatiors are defeated by deviators who break contracts

We first show the advantage that Deviator Agents obtain by breaking contracts. Simple Deviators behave as if no contract was accepted even when agreements are reached. When a contract D = (Ri,Rj) is reached, a Baseline Negotiator pi only selects actions in Ri, which do not violate the contract. In contrast, the Simple Deviator forgets the contract, and always samples actions from the unconstrained policy (possibly selecting ones that violate the contract). Conditional Deviators are more sophisticated, and optimize their actions assuming that peers who accepted a contract would act according to it. Similarly to Simple Deviators, when no deal has been agreed, a Conditional Deviator pi selects actions from the unconstrained policy. However, when a deal D = (Ri,Rj) is agreed on with another player pj, the Conditional Deviator considers multiple actions it might take (these are sampled from its policy, and likely include candidate actions a ∉ Ri that violate the contract). For each such action, it performs multiple simulations of the next turn, by sampling actions for pj from the policy constrained by the contract, reflecting the assumption that pj would honor the agreement and thus refrain from taking an action a ∉ Rj. The Conditional Deviator uses its average value in the sample to estimate the expected next turn utility for each candidate action, selecting the action that maximizes this expected value. The Deviator agents are fully described in the section “Deviator Agent algorithms”.

We evaluate the relative performance of Baseline Negotiators and Deviators. Figure 6 shows the winrate ratio in games with k Deviator agents playing against 7−k Baseline Negotiators, for k ∈ {1,2,…,6}. Figure 6 shows that even the Simple Deviator significantly outperforms the Baseline Negotiator, and that the Conditional Deviator overwhelmingly outperforms Baseline Negotiators (winning twice or three times more frequently).

Left: Mutual Proposal protocol. Right: Propose-Choose protocol. The x axis is the number of Deviator agents, and the y axis is the winrate advantage of the deviator agent over the Baseline Negotiators, expressed as a ratio \(\frac{{W}_{D}}{{W}_{C}}\), where WD is the average winrate of the Deviator agents and WC of the Baseline Negotiator agents, measured over 10,000 games.

Mitigating the deviation problem: defensive agents

Communication allows Baseline Negotiators to outperform non-communicators, but the section “Baseline negotiatiors are defeated by deviators who break contracts” shows they are vulnerable to Deviators who gain the upper hand by breaking contracts. We overcome this problem using Defensive Agents that respond adversely to deviations. We consider Binary Negotiators that cease to communicate with agents who have deviated, and Sanctioning Agents who modify their goal to actively attempt to lower the deviator’s value. We also consider Learned Deviators, which learn when to activate the deviator behavior mode (behaving identically to Baseline Negotiators until that moment); these agents learn to break agreements very rarely, making the vast majority of communication in the game truthful.

Defensive Agents deter deviations by negatively responding to them. Defensive agents initially act identically to the Baseline Negotiators, but when a defensive agent pi accepts a deal D = (Ri,Rj) with a counterpart agent pj, they examine the move that pj executes the next turn. If pj deviates from the agreement (i.e., takes an action aj ∉ Rj), they modify their behavior for the remainder of the game. We consider two responses to deviations: Binary Negotiators and Sanctioning Agents.

Binary Negotiators respond to a peer pj who deviates from an agreement by ignoring any communication from them until the end of the game. Following a deviation, a Binary Negotiator stops making any proposals to the deviator, and declines all proposals from the deviator (colloquially, they cease to trust the deviator).

A Sanctioning Agent pi responds to a deviation by a peer pj by selecting actions so as to lower the deviator pj’s reward. A Baseline Negotiator pi evaluates actions sampled from the policy π by performing simulations yielding possible future states \(s^{\prime}\), ranking actions by the expected future value \({V}_{i}(s^{\prime} )\). A Sanctioning Agent considers both its own value \({V}_{i}(s^{\prime} )\) and the deviator’s value \({V}_{j}(s^{\prime} )\) to rank actions. It maximizes the metric \({V}_{i}(s^{\prime} )-\alpha {V}_{j}(s^{\prime} )\), which consists of both improving its own probability of winning \({V}_{i}(s^{\prime} )\) and lowering pj’s probability of winning \({V}_{j}(s^{\prime} )\). The parameter α ≥ 0 controls the relative importance placed on the two goals, with α = 0 reflecting no sanctioning (equivalent to Baseline Negotiators) and α = 1 reflecting equal importance on lowering the Deviator’s utility. As α → ∞, the Sanctioning Agent focuses solely on making the deviator lose the game, without regard to its own odds of winning the game (we use α = 1, see Supplementary Note 10). Note that Sanctioning Agents themselves do not deviate from their contracts.

Sanctioning dramatically reduces the advantage of deviation

We evaluate how a population of Defensive agents performs against Deviator agents. We consider games with k Deviators playing against 7 − k Defensive agents, for k ∈ {1,2,…,6}, presented in Fig. 7. The figure shows that Binary Negotiators either outperform Deviators or least significantly reduce their advantage, and that Sanctioning Agents offer an even stronger defense, significantly outperforming deviators when the majority of the players are Sanctioning Agents. Defensive agents behave identically to Baseline Negotiators when playing with Baseline Negotiators, thus retaining the advantages of communication.

Left: Mutual Proposal protocol. Right: Propose-Choose protocol. We consider multiple games, each with agents of exactly two types: either k Deviators and 7 − k Baseline Negotiators, or k Deviators and 7 − k Binary Negotiators, or k Deviators and 7 − k Sanctioning Agents. The y-axis is the ratio \(\frac{{W}_{dev}}{{W}_{def}}\) between the average winrate Wdev of a Conditional Deviator and the average winrate Wdef of a Defensive agent (so values lower than 1 indicate a Defensive agent outperforms a Deviator agent). In both protocols, a population of Binary Negotiators significantly lowers the advantage of Deviators, as compared to a population of Baseline Negotiators. While Deviators may still win more often than Binary Negotiators when there are few Binary Negotiators, the gap is much smaller than the one arising with Baseline Negotiators. Further, Sanctioning Agents offer a much stronger defense against Deviators. When there are more Sanctioning agents than Deviators, a Deviator wins the game much less frequently than a Sanctioning agent.

Hence, negatively responding to broken contracts allows agents to benefit from increased cooperation while resisting deviations. However, Deviators may adapt their behavior trying to render this defense less effective. For instance, a Simple or Conditional Deviator attempts to exploit others at every turn, selecting actions violating agreed contracts whenever they deem that doing so offers even a slight advantage, triggering the adverse response of Defensive agents early in the game. Hence, we consider Learned Deviators, whose parameters are optimized to best decide when to deviate in games against a population of Sanctioning agents.

A Learned Deviator pi considers two features of the current state s and a contract D = (Ri,Rj) agreed to with another player pj: the approximate immediate deviation gain ϕi(s) reflecting the immediate improvement in value pi can gain by deviating from D, and the remaining opponent strength ψi(s) reflecting the ability of the other agents to retaliate against pi should pi deviate from a contract. Given the state and the contracts agreed to by pi, both of these features can be computed using the value and policy functions.

A Learned Deviator waits until a turn where the immediate gains from deviation are high enough and the ability of the other agent to retaliate is low enough, and only then deviates from an agreement. Such agents are parametrized by two thresholds tϕ, tψ. They behave as a Baseline Negotiator, respecting all agreed contracts, until the first turn where the state s is one such that ϕ(s) > tϕ and ψ(s) < tψ. Then, they switch their behavior to that of the Conditional Deviator. Any two thresholds tϕ, tψ result in different Deviator behavior and winrate against a population of 6 Sanctioning agents. Full details regarding how the features ϕ(s), ψ(s) are computed and tuned to maximize the winrate against Sanctioning agents are given in the section “Learned Deviator”. Figure 8 shows the winrate advantage of the Deviator for several choices of the two thresholds.

We call this the Deviator advantage. The cells are arranged along a horizontal tψ axis and a vertical tϕ axis. The color of each cell indicates the Deviator advantage: blue indicates the advantage is >1 and, i.e., the Learned Deviator outperforms the Sanctioning Agents, and red indicates the opposite. The colors of the top and bottom circles in each cell indicate the endpoints of a 95% confidence interval. These are evaluated with halved hyperparameters M and N relative to the earlier results. Left: Mutual Proposal protocol. Right: Propose-Choose protocol. Each cell is the result of over 40,000 games (with one Deviator using the given parameters, and 6 Sanctioning agents).

Even under the strongest parameter settings for the Learned Deviator, it only has a slight advantage, winning 1.7% more often than the Sanctioning agents with the Mutual Proposal protocol (1.0% for Propose-Choose). The average turn in which the Learned Deviator indeed deviates from an agreed contract is 82 (respectively, 93), while the average number of turns in games where deviations occurred is 101 (respectively, 110 for Propose-Choose). This indicates that the Learned Deviator adapts to break contracts quite late in the game. In the few games where the Learned Deviator does break its agreements, it often wins: 53.1% of the time for Mutual Proposal and 52.6% for Propose-Choose (see Supplementary Note 9 for further behavioral analysis).

Overall across games, the Learned Deviator honors 99.8% (or 99.7% for Propose-Choose) of the contracts it had agreed to; by optimizing its behavior against a population of Sanctioning agents it adapts to honor the vast majority of its contracts, making the communication in these games almost entirely truthful.

Discussion

We consider mechanisms enabling agents to negotiate alliances and joint plans. Similarly to recent work25,27,31,58, we consider agents working in teams, each trying to counter the strategies of other teams. We take agents trained using reinforcement learning and augment them with a protocol for agreeing future moves. Our agents identify mutually beneficial deals by simulating future game states under possible contracts.

In terms of broader impact, Diplomacy38 is a decades-long AI challenge, and we hope that this work will inspire future research on problems of cooperation. Diplomacy makes an exceptional testbed: it has simple rules but high emergent complexity, and an immense action space40,43 which has recently been tackled using deep learning39,47,48 (future work could of course uncover other successful approaches). Communication adds another important layer of complexity. Game theory offers powerful tools for analyzing games such as Diplomacy and for constructing agents. In particular, solving for a subgame perfect equilibrium or applying repeated game analysis7 could be useful for building capable agents. However, the large action space in Diplomacy results in an incredibly large game tree size, which impedes game theoretic analysis (see Supplementary Note 8). We thus combine reinforcement learning methods with game theoretic solutions (the Nash Bargaining Solution52), and address computational barriers using a Monte-Carlo simulation of potential future game trajectories.

Our results highlight the difficulty in establishing cooperation due to deviation from agreements. For humans, the potential to break promises or mislead others hinders cooperation, requiring people to decide whether they can trust others50,51. Similarly for AI agents in Diplomacy, when agreements are non-binding, an agent may promise to act one way during negotiation but act in a different way later. Our objective was to understand the conditions that foster truthful cooperation between artificial agents, and our results offer insight into some of the risks that arise with complex agents that can misrepresent intentions. We find that similarly to humans, cooperation is jeopardized by the ability of agents to break prior agreements. The “Baseline negotiatiors are defeated by deviators who break contracts” section shows that while Baseline Negotiators outperform silent agents due to increased cooperation, they are susceptible to peers who make false promises regarding future actions. We mitigate this risk by endowing agents with the inclination to retaliate against such deviations.

Strong Diplomacy AI gameplay may differ from human play59 and different people may have different expectations regarding the behavior of artificial Diplomacy agents60. Recent methods recover human-like play through regularization61. We have not incorporated such regularization: after the policy network is learned from imitating human play, further training is based entirely on reinforcement learning.

Humans playing Diplomacy sometimes employ a strategy of keeping promises until a crucial deviation late in the game62. It’s striking that our Learned Deviators follow a similar strategy: they adapt to typically follow through on their promises for long periods of time, breaking agreements only when the gains are high enough and when their opponents are left less capable of retaliating for the deviation. We strived to create a rich representation of the Diplomacy game, recreating its dilemmas relating to strategic communication. One possible cause for the strategic similarity is that Diplomacy was designed such that deviating from agreements can be beneficial when the opponent would find it difficult to respond. In other words, Diplomacy, even under the restricted communication protocols we considered, has a strategy space where such a behavior can be an effective way to win, so both humans and our Learned Deviator exhibit it.

The “Sanctioning dramatically reduces the advantage of deviation” section shows that when a population of agents are inclined to sanction broken agreements, they are resistant to deviators; when the proportion of retaliating agents is high enough, constant deviations from agreements are not beneficial as the long term negative consequences of the retaliation outweigh the immediate gains from the deviation. Even Learned Deviators, who optimize their choice of when to deviate against Defensive agents, rarely deviate from agreements. Hence, sanctioning peers who break contracts can foster more truthful communication among AI agents.

Learned Deviators do gain a slight advantage against Defensive agents, and when many agents deviate from agreements sanctioning deviations becomes more costly. Hence, Sanctioning agents may themselves start deviating from agreements, and if the sanctioning behavior proves to be costly, the Sanctioning agents may cease sanctioning deviators. In Supplementary Note 11 we probe the potential learning dynamics of an agent population with regards to sanctioning and deviation: we consider Sanctioning agents who themselves deviate from agreements, and the incentive to cease sanctioning peers when this sanctioning behavior is costly. We find that a population of sanctioning agents is only at a near-equilibrium (in contrast to simple repeated games such as Iterated Prisoner’s Dilemma which may admit a fully stable cooperative equilibrium57).

Such issues have been studied in the literature on the evolution of cooperation, and for simpler games researchers noted that additional mechanisms might be required to support sustained cooperation, such as having repeated interaction. Similarly for Diplomacy, to avoid a population of learning agents gradually becoming less cooperative one might need to employ additional mechanisms. Repeating the interaction and playing multiple games of Diplomacy against the same opponents could increase the incentives for cooperative behavior. Further, we focused on sanctioning, but humans rely on diverse solutions to deter deviating from agreements such as employing trust and reputation systems63,64, or relying on a judicial system to provide remedies for the breach of contracts65. Future work could investigate how to implement such mechanisms for AI agents, similarly to earlier work on deterring deviations in dynamic coalition formation settings, for instance keeping track of peer behavior over multiple interactions or leveraging indirect reciprocity and tagging66,67,68.

One limitation of our methods is that we took initial agents trained for No-Press Diplomacy, then augmented them with negotiation algorithms. This means that the action strategy, coming from the base agent, was designed separately from the communication and negotiation strategies. Future work might build stronger agents through better methods for co-evolving the action and communication strategies, allowing the agent’s play to capture even more of the strategic richness that arises in human play. Our work has further limitations (discussed in depth in Supplementary Note 3), such as assuming a known deterministic model of the environment, holding the policy and value functions fixed following the initial reinforcement learning step, and using simple communication protocols rather than more elaborate ones or natural language. Further, many questions remain open for future research. Could one design more intricate protocols, considering more than two agents, or communicating knowledge or goals? How could one handle imperfect information? Finally, what other mechanisms could deter deviations from agreements?

Methods

We present the algorithms for the agents of the sections “Negotiating with binding agreements” (Baseline Negotiator) and “Negotiating with non-binding agreements” (Simple, Conditional and Learned Deviators, Binary Negotiators and Sanctioning Agents).

Simulation building blocks

Our methods use policies πi: S × Ai → [0,1] mapping any game state to a distribution over actions, with the probability of taking action a ∈ Ai in state s denoted as πi(s,a), and a state-value function \(V:S\to {{\mathbb{R}}}^{n}\) which maps any state to the expected reward of all the agents (for Diplomacy this can be viewed as estimated win probabilities). We use π to refer to policies πi for each player pi. Various methods were proposed for constructing policy and value functions in Diplomacy47,48,49. Our experiments in “Results” use policy and value networks trained by first imitating human gameplay on a dataset of human Diplomacy games, then applying reinforcement learning to improve agent policies39 (available at https://github.com/deepmind/diplomacy). Hence, the value and policy functions we use are based on both learning from human data and applying reinforcement learning, but our algorithms can work with any such functions. We denote sampling an action a in state s for agent pi from the policy πi as a ~ πi(s). Given a contract D = (Ri,Rj) we can restrict the policies πi, πj to respect the limitations expressed in the contract, yielding a policy \({\pi }_{i}^{{R}_{i}}\) (also written as \({\pi }_{i}^{D}\)) for pi where the probability of pi taking action a in state s is:

Note that given a neural network capturing a policy πi and a restricted action set Ri (resulting from pi agreeing to a contract D = (Ri,Rj)), one can sample an action ai from \({\pi }_{i}^{{R}_{i}}(s)\) by masking the logits of all actions not in Ri (i.e., setting the weight of all actions not in Ri to zero before applying the final softmax layer). Similarly, we denote by \({\pi }_{j}^{{R}_{j}}\), or \({\pi }_{j}^{D}\), the policy for pj which respects the contract’s restriction Rj.

Estimating values using a sample of simulations

A key building block in our methods is to simulate what might occur in the next turn when players follow various policies, such as the unconstrained policy πi or a constrained policy \({\pi }_{i}^{{R}_{i}}\). Let a be a vector of agent actions \({{{{{{{\bf{a}}}}}}}}={({a}_{i})}_{i=1}^{n}\), and let T(s,a) be the transition function of the game, taking the current state s and the action profile a and returning the resulting next game state. Finally, let \({{{{{{{\bf{V}}}}}}}}(s)={({V}_{i}(s))}_{i=1}^{n}\) be a state value function, denoting the expected reward (or probability of winning) of the players in a given state. We can combine these to obtain a joint action value function: Q(s,a) = V(T(s,a)).

Consider a player pi trying to select an action in a state s and playing with agents P−i = {p1,…,pi−1,pi+1,…,pn} who follow the respective policies π−i = (π1,…,πi−1,πi+1,…,πn). Each policy in π−i may be for instance the unconstrained policy π or alternatively πj may be a policy \({\pi }_{j}^{{R}_{j}}\) that is constrained by some contract D = (Rj,Rk) that agent pj had agreed with some agent pk. By a−i ~ π−i we denote sampling actions for the players P−i from these respective policies π−i. We denote the full action profile combining the action ai for pi and the actions a−i for the players P−i as (ai,a−i). By applying the joint action value function Q(s,(ai,a−i)) we arrive at an estimate of each player’s value if pi takes the action ai; we can consider this as a conditional action value function \({{{{{{{{\bf{Q}}}}}}}}}^{{{{{{{{{\bf{a}}}}}}}}}_{-i}}(s,{a}_{i})={{{{{{{\bf{Q}}}}}}}}(s,({a}_{i},{{{{{{{{\bf{a}}}}}}}}}_{-i}))\).

We can also extend this to two players: we denote the action profile combining ai for pi and aj for pj as (ai,aj,a−{i, j}), and the resulting pairwise conditional action value function as \({{{{{{{{\bf{Q}}}}}}}}}^{{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}}(s,({a}_{i},{a}_{j}))={{{{{{{\bf{Q}}}}}}}}(s,({a}_{i},{a}_{j},{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}))={{{{{{{\bf{V}}}}}}}}(T(s,({a}_{i},{a}_{j},{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}})))\).

Given a value function Vi, we can estimate pi’s value for taking an action ai as the expected value pi in the next game state, with the expectation taken over the actions of the other agents sampled from their respective policies π−i:

Simulation action value estimation (SAVE)

Consider sampling a list of M partial action profiles \({{{{{{{{\bf{a}}}}}}}}}_{-i}^{1},\ldots,{{{{{{{{\bf{a}}}}}}}}}_{-i}^{M} \sim {\pi }_{-i}\). We denote this sample as \({{{{{{{{\bf{A}}}}}}}}}_{-i}=({{{{{{{{\bf{a}}}}}}}}}_{-i}^{1},\ldots,{{{{{{{{\bf{a}}}}}}}}}_{-i}^{M})\) (where each individual element \({{{{{{{{\bf{a}}}}}}}}}_{-i}^{m}\) is a partial action profile that consists of actions for all players except pi). We use the sample A−i to get a Monte-Carlo estimate for agent pi’s expected value when taking action ai, by calculating \({\hat{Q}}_{i}^{{{{{{{{{\bf{A}}}}}}}}}_{-i}}(s,{a}_{i})=\frac{1}{M}\mathop{\sum }\nolimits_{m=1}^{M}{Q}_{i}^{{{{{{{{{\bf{a}}}}}}}}}_{-i}^{j}}(s,{a}_{i})\). We refer to this as Simulation Action Value Estimation (SAVE). We may also estimate a different player pj’s value Vj(T(s,(ai,a−i))) when pi takes an action ai in a similar way. We use the notation \({\hat{Q}}_{j}^{{{{{{{{{\bf{A}}}}}}}}}_{-i}}(s,{a}_{i})\). The same is true for the STAVE method which is introduced later.

Simulation value estimation (SVE)

Consider a player pi considering a contract D = (Ri,Rj). When pi, pj select actions under this contract they use the respective policies \({\pi }_{i}^{D}={\pi }_{i}^{{R}_{i}}\) and \({\pi }_{j}^{D}={\pi }_{j}^{{R}_{j}}\). Assuming all other agents follow the unrestricted policy π, we consider the policy profile \({\pi }^{D}=({\pi }_{1}^{D},\ldots,{\pi }_{n}^{D})\) where \({\pi }_{i}^{D}={\pi }_{i}^{{R}_{i}}\) and \({\pi }_{j}^{D}={\pi }_{j}^{{R}_{j}}\), and for any k ∉ {i,j} we have \({\pi }_{k}^{D}={\pi }_{k}\). Under these assumptions, we can estimate pi’s value by taking the expected value in the next game state, with the expectation taken over all agents’ actions when sampled from their respective policies in πD:

We can obtain a Monte-Carlo estimate of the agents’ values. We take a sample A of full action profiles a1,…,aM ~ πD (where each am is a full action profile, consisting of actions for all the agents). Averaging over these, we obtain the value estimate \({\hat{V}}_{i}^{{{{{{{{\bf{A}}}}}}}}}(s)=\frac{1}{M}\mathop{\sum }\nolimits_{m=1}^{M}{Q}_{i}(s,{{{{{{{{\bf{a}}}}}}}}}^{m})\). We refer to this method as Simulation value estimation (SVE).

Simulation two-action value estimation (STAVE)

We use a similar calculation for the Propose-Choose protocol, when considering the combined effect of an action ai for player pi and an action aj for player pj, for example in order to evaluate a contract D = ({ai},{aj}). In such situations, we may evaluate pi’s value by taking their expected value in the next game state, over the actions of the other agents as sampled from their respective policies π−{i, j}. This yields the following value:

This expectation is taken over many actions, so we approximate this using a Monte-Carlo simulation. We consider a sample A−{i, j} consisting of actions for all players except {pi,pj}: \({{{{{{{{\bf{A}}}}}}}}}_{-\{i,j\}}=({{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{1},{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{2},\ldots,{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{M})\) where each element \({{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{m}\) contains the actions of all players except pi, pj. Given an action ai for agent pi and an action aj for pj, each such element \({{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{m}\) can be completed into a full action profile \(({a}_{i},{a}_{j},{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{m})\), and used to evaluate the next state value. Average over the sample we get: \({\hat{Q}}_{i}^{{{{{{{{\bf{A}}}}}}}}}(s,({a}_{i},{a}_{j}))=\frac{1}{M}\mathop{\sum }\nolimits_{m=1}^{M}{Q}_{i}^{{{{{{{{{\bf{a}}}}}}}}}_{-\{i,j\}}^{m}}(s,({a}_{i},{a}_{j}))\). We call this method Simulation two-action value estimation (STAVE).

The sampled best responses procedure

A recent method for constructing No-Press Diplomacy agents starts with a policy which mimics human gameplay (imitation learning), then gradually improves this policy using a neural policy iteration process39. In the “Negotiators outperform non-communicating agents” section, we use agents produced under this approach as our baseline non-communicating agents. The neural policy iteration works by taking a current behavior policy network π, and applying an improvement operator aimed at generating actions that are better than those in this underlying policy. Many game trajectories are then generated using this improved policy. Given these games a new policy neural network \(\pi ^{\prime}\) is distilled via supervised learning. The process is repeated, resulting in stronger and stronger policies.

We briefly describe the improvement operator used for No-Press Diplomacy39, called Sampled Best Response (SBR for short), as we use it as a building block for our negotiating agents (see the recent paper39 for further details about SBR and using it to derive Diplomacy RL agents). SBR selects the action of player i in state s as follows. SBR first samples many candidate actions \({\{{c}^{j}\}}_{j=1}^{C}\) for the target player i from the policy \({\pi }_{i}^{c}\) (the current policy which SBR aims to improve upon). SBR then uses a policy πb to produce the actions of the other agents, either using the same network as πc or a previous generation policy.

To evaluate the quality of cj, SBR simulates the possible behavior of other players by taking a sample A of partial action profiles \({{{{{{{\bf{A}}}}}}}}={{{{{{{{\bf{a}}}}}}}}}_{-i}^{1},\ldots,{{{{{{{{\bf{a}}}}}}}}}_{-i}^{M} \sim {\pi }_{-i}^{b}\), then evaluating the estimated future reward (akin to the SAVE procedure of the section “Simulation building blocks”):

The action maximizing this estimated value under these simulations is returned by SBR. The SBR procedure39 is given in Algorithm 1.

Algorithm 1 Sampled Best Response.

Baseline Negotiator algorithms

In the following sections, we describe the algorithms for the Baseline Negotiator of the section “Negotiating with binding agreements”, including RSS and MBDS. We first describe the action phase, as it is the same for both protocols. If no agreement has been reached during the negotiation phase in state s, the Baseline Negotiator pi is free to choose any legal action from its unconstrained policy \({\pi }_{i}^{c}\), and has no constraining information about other players’ moves. It thus selects an action by applying the Sampled Best Response (SBR) method described in Algorithm 1, selecting the action ai = SBR\((s,i,{\pi }_{-i}^{b},{\pi }_{i}^{c},{V}_{i},M,N)\).

If a contract Di,j = (Ri,Rj) was agreed, it places a restriction Ri that the Baseline Negotiator respects. If Ri contains only a single action that pi may take under the contract, the Baseline Negotiator simply selects that action. If multiple actions are allowed under Ri, the Baseline Negotiator selects an action by applying SBR on a restricted policy \({\pi }^{{R}_{i}}\) and assuming that pj would also respect the agreement and select actions from the restricted policy \({\pi }^{{R}_{j}}\). If there are multiple agreed contracts \({D}_{i,{j}_{1}}=({R}_{i}^{{j}_{1}},{R}_{{j}_{1}}),\ldots,{D}_{i,{j}_{k}}=({R}_{i}^{{j}_{k}},{R}_{{j}_{k}})\) (as permitted by the Mutual Proposal protocol) then \({R}_{i}={R}_{i}^{{j}_{1}}\cap \cdots \cap {R}_{i}^{{j}_{k}}\) is the intersection of the constraints. The negotiator then selects an action ai = SBR\((s,i,({\pi }_{{j}_{1}}^{{R}_{{j}_{1}},b},\ldots,{\pi }_{{j}_{k}}^{{R}_{{j}_{k}},b},{\pi }_{-\{i,{j}_{1},\ldots,{j}_{k}\}}^{b}),{\pi }_{i}^{{R}_{i},c},{V}_{i},M,N)\).

Restriction simulation sampling (RSS)

Our negotiation algorithm for the Mutual Proposal protocol, called RSS, is based on applying SVE to contrast what might occur when a contract is agreed and when it is not agreed.

We consider the case where there is a single contract Di,j = (Ri,Rj) an agent may propose to another, such as a Peace contract in Diplomacy. Under the Mutual Proposal protocol an agent pi must decide whether to extend an offer to each of the other agents. When deciding whether agent pi would make a proposal Di,j to agent pj, RSS uses SVE to estimate the expected value to pi in two cases: (1) assuming that pi does not reach an agreement with pj, and (2) assuming pi and pj agree on the contract Di,j = (Ri,Rj). The full RSS method, given in Algorithm 2, compares agent utilities between these cases.

Algorithm 2 Restriction Simulation Sampling.

For the first case, where no agreement is reached, both pi and pj are free to select any action, so we use the unrestricted policies πi and πj for pi and pj. RSS makes the simplifying assumption that no other agent would reach any agreements (i.e no agent in the set P⧹{pi,pj} would reach an agreement with another agent), so we also use the unrestricted policies π for all the other agents. Hence, the case of not having an agreement is evaluated by applying SVE with the policy profile π, so actions for all players p1, …, pn are sampled from their respective unconstrained policies π1, …, πn.

In the second case, where an agreement Di,j = (Ri,Rj) is reached between pi, pj, both pi, pj may only choose actions in the respective action sets Ri, Rj, so we use the restricted policies \({\pi }_{i}^{{R}_{i}},{\pi }_{j}^{{R}_{j}}\) for pi and pj respectively. Similarly to the no agreement case, we make the simplifying assumption that no further contracts between other agents have been agreed, so we can reuse the actions for the other players P⧹{i,j} as used in the SVE procedure for the no-agreement.

To estimate values in the case of reaching an agreement we also require samples from \({\pi }_{i}^{{R}_{i}}\) and \({\pi }_{j}^{{R}_{j}}\) for pi and pj; to get these, we reuse the constraint-satisfying actions from the no-agreement SVE, resampling only those actions which fall outside of Ri and Rj. For instance, for a Peace contract, the resampling for pi, pj is done by rejecting actions that violate the peace. We do so to reduce the variance in the estimate of the difference between values in the agreement and non-agreement case.

We denote by \({\hat{V}}_{i}^{{{{{{{{\bf{B}}}}}}}}}(s)\) the SVE value estimate for pi in the case of no agreement, and by \({\hat{V}}_{i}^{{{{{{{{\bf{B}}}}}}}}^{\prime} }(s)\) the SVE estimate for pi in the case of an agreement D = (Ri,Rj). If \({\hat{V}}_{i}^{{{{{{{{\bf{B}}}}}}}}^{\prime} }(s) > {\hat{V}}_{i}^{{{{{{{{\bf{B}}}}}}}}}(s)\) then pi expects to achieve more utility when the contract is agreed, so they propose it to pj, and otherwise they refrain from proposing it.

Mutually beneficial deal sampling (MBDS)

Our negotiation algorithm for the Propose-Choose protocol is MBDS. It decides which contracts to propose during the Propose phase, and which contracts to choose during the Choose phase. It generates and selects contracts seeking mutually beneficial deals. In order to choose between possible contracts, we used the Nash Bargaining Solution (NBS) from game theory52,53. Given a space \(S\subseteq {{\mathbb{R}}}^{2}\) of feasible agreements (with a point s = (d1,d2) in S yielding respective utilities d1,d2), and a disagreement outcome \({d}^{0}=({d}_{1}^{0},{d}_{2}^{0})\) (with respective utilities \({d}_{1}^{0},{d}_{2}^{0}\)), the NBS is the feasible agreement maximizing the product of utilities over the disagreement baseline: \({{{{{{{\mathrm{argmax}}}}}}}\,}_{({d}_{1},{d}_{2})\in S}{({d}_{1}-{d}_{1}^{0})}^{+}{({d}_{2}-{d}_{2}^{0})}^{+}\).

NBS is the only bargaining solution concept that satisfies certain fair bargaining axioms, producing a negotiation outcome that’s mutually advantageous relative to the no-agreement outcome52.

NBS guarantees Pareto optimality, meaning negotiators never select a contract if they can find an alternative contract that guarantees both sides higher utility than the chosen agreement, independence of units of measure, meaning switching to an equivalent utility representation other than winrates does not result in a change in the chosen contracts, and symmetry, meaning that if the space of possible contracts is symmetric in the winrates it allows, then the chosen agreement would yield both sides the same winrate.

These properties of the NBS are important for our Diplomacy agents, as they guarantee that agreements are chosen so as to maximize the win probabilities of both sides and provide robustness to our choice of measuring utility in terms of winrate improvements. Further, these properties mean that negotiators attempt to choose balanced contracts that both sides may agree on, rather than choosing contracts that prefer one side over the other, which would make it more difficult to agree.

Approximating the Nash Bargaining Solution (NBS): When applying NBS to the Propose-Choose setting, one reasonable interpretation is to consider the disagreement utility as the win probability of the sides assuming no contract is agreed (with both sides selecting actions from their unconstrained policy), and to consider the utility under a contract as the win probabilities of the sides assuming the contract is signed (with both sides selecting actions from the constrained policies under the contract).

However, Diplomacy has more than two agents and combinatorially large action spaces, and agent policies are stochastic. Diplomacy has an enormous action space of 1021 to 1064 legal actions per turn39, and the space of possible contracts grows quadratically in the size of the legal actions set (any combination of a legal action for one side and a legal action for the other side makes a possible contract). Hence, it is intractable to exhaustively search through the space of contracts, or to exactly compute expected win probabilities under a contract. In addition, the interpretation of disagreement utility as assuming no contract is signed by either side is flawed: either side may instead sign a contract with a different peer. We overcome these difficulties using a sampling approach, and by simulating the negotiation process of other agents.

During the propose phase, MBDS generates multiple candidate contracts which agent pi could offer to pj. The candidate contracts \({{{{{{{{\mathcal{D}}}}}}}}}_{i,j}\) are created by generating sets of candidate actions Ci for pi and Cj for pj, and looking at the Cartesian product Ci × Cj = {(ci,cj): ci ∈ Ci, cj ∈ Cj} of these actions, i.e., all possible combinations of the candidate actions for the two sides. The candidate actions for the sides are generated by sampling many actions \({c}_{i}^{1},\ldots,{c}_{1}^{N}\) and \({c}_{j}^{1},\ldots,{c}_{j}^{N}\) from policies \({\pi }_{i}^{c}\) and \({\pi }_{j}^{c}\), and selecting the top K ranked by a metric that combines both pi’s and pj’s expected utility under the actions (making the simplifying assumption that all remaining agents simply select an action using the unrestricted policy profile \({\pi }_{-\{i,j\}}^{b}\)). This unrestricted policy is represented using a list of samples b1,…,bM ~ πb.

We consider these action profiles B = (b1,…,bM), and an additional scaling factor \(\frac{{q}_{i,j}^{0}}{{q}_{j,i}^{0}}\). Given these, the combined metric for an action ci is a weighted sum of pi’s utility and pj’s utility: \({\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,{c}_{i})+\beta \frac{{q}_{i,j}^{0}}{{q}_{j,i}^{0}}{\hat{Q}}_{j}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,{c}_{i})\). The parameter 0 < β < 1 reflects the degree of emphasis on the utility of the partner to whom the contract is offered; low values of β reflect emphasizing actions that lead to a high utility for the proposer, and high values of β emphasize actions that lead to a high utility for the proposee.

The scaling factor \(\frac{{q}_{i,j}^{0}}{{q}_{j,i}^{0}}\) adjusts the utilities to be on the same scale, facilitating deal-making between players with high versus low estimated values. It is computed by sampling a set B of M action profiles for all players from πb, and using SAVE to select selfishly-best actions \({c}_{i}^{*}\) from among \({c}_{i}^{1},\ldots,{c}_{i}^{N}\) and \({c}_{j}^{*}\) from among \({c}_{j}^{1},\ldots,{c}_{j}^{N}\). \({c}_{i}^{*}\) and \({c}_{j}^{*}\) are then combined using STAVE to estimate pi’s and pj’s values in the case where no deal is made: \({q}_{i,j}^{0}={\hat{Q}}_{i}^{{\bar{{{{{{{{\bf{b}}}}}}}}}}_{-\{i,j\}}}(s,({c}_{i},{c}_{j}))\) and similarly for \({q}_{j,i}^{0}\).

When deciding about which of the contracts in \({{{{{{{{\mathcal{D}}}}}}}}}_{i,j}\) to propose to pj, the proposer pi must consider and balance two factors relating to the resulting agent utilities (winrates): (1) the value that pi stands to gain should the contract be agreed on, and (2) the likelihood of pj agreeing to the contract, which is determined by the value pj stands to gain should the contract be agreed.

We combine these two factors by using the Nash Bargaining Solution52, which reflects reasonable bargaining axioms69. We compute the Nash Bargaining Score in Algorithm 3, which relies on qi, qj which are used as estimates of the no-deal baseline values that the gains are relative to. The values qi, qj indicate what values pi and pj may expect to obtain in the absence of a deal between them.

Algorithm 3 Nash Bargaining Score.

When there is no agreement, both pi and pj are free to choose any legal action. Hence, we assume they would choose the best actions \({c}_{i}^{*}\) and \({c}_{j}^{*}\) that they can from among samples from the policy πc. As in SBR, we take the simplifying assumption that that the remaining agents P⧹{pi,pj} would not form agreements amongst themselves, and hence would simply choose actions from their policy πb. Seeking to estimate the next state values for this case, we use the STAVE algorithm described earlier, defining \({q}_{i,j}^{0}={\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-\{i,j\}}}(s,({c}_{i},{c}_{j}))\) and \({q}_{j,i}^{0}={\hat{Q}}_{j}^{{{{{{{{{\bf{B}}}}}}}}}_{-\{i,j\}}}(s,({c}_{i},{c}_{j}))\). We refer to these values as the initial no-deal value estimates.

The initial no-deal estimates may not fully represent the values pi and pj can reach without a deal between them, as either side may be able to do better by making a deal with another player. To estimate this we use an iterative approach akin to other iterative negotiation methods70 and described in Algorithm 4.

Algorithm 4 BATNA Update by Internal Dynamic Bargaining Simulation.

Algorithm 4 simulates an iterative process of updating beliefs regarding the contracts that might be agreed between all agents, and the resulting estimates of the values all agents might obtain. In each iteration, we examine each pair of players, and assume the deal they would select is the one with the highest Nash Bargaining Score over the current baseline estimates. Further, the update assumes that when each player pi considers their action with regard to each partner pj, it contrasts its no-deal baseline with pj with the best alternative deal from among the remaining players P⧹{pi,pj}. We apply a damping factor κ to avoid oscillations in partner choice. Using the updated baselines, MBDS proposes to each partner the deal with the highest score. The full procedure for the Proposal phase is given in Algorithm 5.

Algorithm 5 Mutually Beneficial Deal Sampling Proposal.

MBDS applies the same ranking during the Choose phase. In this phase, each agent must choose from amongst the contracts that are On The Table for them, selecting a single partner to agree a contract with. MDBS for a target agent pi examines the set of contracts involving pi, and computes the Nash Bargaining score for each one using the baseline values from the Proposal phase. If some Nash Bargaining scores are positive, it considers the partners with whom such deals are possible, selecting the partner with whom the highest-score deal is most favorable to itself. If not, it simply selects the deal most favorable to itself from among those that are estimated to be preferable to the no-deal baseline with their respective counterpart.

Lastly, for the chosen deal, MBDS evaluates and indicates whether both deals with the chosen counterpart would be acceptable, by comparing their estimated values with the no-deal BATNA. The full MBDS method for the Choose phase is given in algorithm 6.

Algorithm 6 Mutually Beneficial Deal Sampling Choice.

Deviator Agent algorithms

Deviators are agents who may deviate from deals they have agreed to. In the following sections, we fully describe the Simple Deviators and Conditional Deviators of the section “Negotiating with non-binding agreements”. Simple Deviators enter into deals in a way that is identical to the Baseline Negotiators, but even when a deal is agreed on they select their action directly from the unconstrained SBR policy, as if no deal was agreed. Conditional Deviators also enter into deals identically to Baseline Negotiators, but when selecting their action in turns when a deal has been reached, they attempt to maximize their reward under the assumption that the other side would honor the deal.

Simple Deviators

During the negotiation phase, Simple Deviators behave identically to the Baseline Negotiator. Simple Deviators only behave differently to Baseline Negotiators during the action phase.

During the action phase in state s, if no deal has been reached for a Baseline Negotiator pi, this Baseline Negotiator selects an action ai = SBR\((s,i,{\pi }_{-i}^{b},{\pi }_{i}^{c},{V}_{i},M,N)\) using the unconstrained policy \({\pi }_{i}^{c}\). However, if the Baseline Negotiator pi agreed to deals \({D}_{i,j}=({R}_{i}^{j},{R}_{j})\) with players j1,…,jk then the Baseline Negotiator pi selects an action ai = SBR\((s,i,({\pi }_{{j}_{1}}^{{R}_{{j}_{1}},b},\ldots,{\pi }_{{j}_{k}}^{{R}_{{j}_{k}},b},{\pi }_{-\{i,{j}_{1},\ldots,{j}_{k}\}}^{b}),{\pi }_{i}^{{R}_{i}^{{j}_{1}}\cap \cdots \cap {R}_{i}^{{j}_{k}},c},{V}_{i},M,N)\) using the constrained policies \({\pi }^{{R}_{i}^{{j}_{1}}\cap \cdots \cap {R}_{i}^{{j}_{k}},c}\) and \({\pi }_{j}^{{R}_{j},b}\).

In contrast to a Baseline Negotiator, a Simple Deviator pi always selects an action from the unconstrained policy \({\pi }_{i}^{c}\), regardless of whether a deal has been agreed or not (i.e., it always selects an action ai = SBR\((s,i,{\pi }_{-i}^{b},{\pi }_{i}^{c},{V}_{i},M,N)\)). Colloquially speaking, we say that Simple Deviators forget that they have signed deals with others—even when a Simple Deviator pi has agreed on a deal D = (Ri,Rj) with another player pj, during the action phase it still chooses an action which does not necessarily respect the constraint Ri, and doesn’t assume that pj will respect the constraint Rj.

Conditional Deviators

Similarly to the Simple Deviators, during the negotiation phase the behavior of a Conditional Deviator is identical to that of a Baseline Negotiator. Conditional Deviators only behave differently to Baseline Negotiators during the action phase.

When no deal has been agreed, a Conditional Deviator pi selects an action in the same way as the Baseline Negotiator, i.e., using the SBR logic: sampling a set B−i of M partial action profiles, sampling N candidate actions from the unrestricted policy \({\pi }_{i}^{c}\), and choosing the one yielding the maximal value on the sample, i.e., \({{{{{{{\mathrm{argmax}}}}}}}\,}_{c\in \{{c}^{1},\ldots,{c}^{N}\}}{\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,c)\). However, when a deal D = (Ri,Rj) has been agreed between a Conditional Deviator pi and some other player pj, the Conditional Deviator attempts to maximize its gain under the assumption that the other side would respect their end of the deal; again we use a sample B−i of M partial action profiles, but in this sample we select the action for any deal partner pj from the restricted policy \({\pi }_{j}^{{R}_{j},b}\), reflecting the assumption that the peer pj would behave according to the restriction of the contract. We consider the top candidate action sampled from the unconstrained policy \({c}^{*}={{{{{{{\mathrm{argmax}}}}}}}\,}_{c\in \{{c}^{1},\ldots,{c}^{N}\}}{\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,c)\). We then consider actions which do not violate any contract pi has agreed to, by taking a sample of candidate actions \(c{^{\prime} }^{1},\ldots,c{^{\prime} }^{N}\) which are sampled from the policy \({\pi }_{i}^{{R}_{i}^{1}\cap \cdots \cap {R}_{i}^{n},c}\) that respects all the contracts that pi had agreed to. The top ranked action that does not violate pi’s agreements is \(c{^{\prime} }^{*}={{{{{{{\mathrm{argmax}}}}}}}\,}_{c^{\prime} \in \{c{^{\prime} }^{1},\ldots,c{^{\prime} }^{N}\}}{\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,c^{\prime} )\). If the estimated value of c* exceeds that of \(c{^{\prime} }^{*}\), the Conditional Deviator expects to gain by breaking its agreements and it chooses the contract-violating action c*; otherwise, when a deviation is not deemed profitable, it selects the top contract-respecting action \(c{^{\prime} }^{*}\). This process is given in Algorithm 7.

Algorithm 7 Conditional Deviator Action Selection for pi.

Learned Deviator

We describe our approach for training a deviator agent that learns the optimal state in which to deviate from a contract when playing a population of other agents (in our empirical analysis these peers are the Sanctioning agents of the section “Mitigating the deviation problem: defensive agents”). Consider that the defensive agents of the section “Mitigating the deviation problem: defensive agents” modify their behavior following any deviation from a previous agreement, and starting from that point they persist with their negative behavior towards the deviator for the remainder of the game; if pi has deviated from a deal with pj at a certain point in a game, pj will never again reach an agreement with pi in that game. Thus, a deviator agent pi playing against a defensive agent pj only has a single opportunity to deviate from an agreement with them in a game. Hence, it makes sense to pose the issue of optimizing the behavior of a deviator pi as identifying the point in the game where it is best to deviate from an agreement with some defensive agent pj. We simplify the problem further by having the Learned Deviators consider only two features of the current state s and contract D = (Ri,Rj) agreed with a peer pj. The first is the approximate immediate deviation gain \(\phi={\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,{c}^{*})-{\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,c{^{\prime} }^{*})\), as defined on line 15 of Algorithm 7, reflecting the immediate improvement in value pi can gain by deviating from D. The second is the strength of the other agents that pi may turn against itself should pi deviate from a contract. In Diplomacy, the win probability of a player pi in a state s is closely correlated with the number of units (or supply centers) that this player has, and how well positioned they are to attack other players. These characteristics of the state are also closely correlated with the ability of that player to retaliate against an attack from another player. In other words, when a player pi considers deviating from an agreement with player pj, they should be more worried regarding player pj’s retaliation when pj has a high win probability (equivalently a high value) in the resulting state. Thus, we derive a measure ψ from two considerations. One is that if none of the partners with whom a contract has been made is strong, pi may more safely deviate. The other is that if one of the partners is very dominant among the non-pi players, then that partner may be mostly incentivized to reduce pi’s value to begin with, and so pi doesn’t have as much to lose by triggering its retaliation. Thus, with c*, s, B, and Di,j as defined in Algorithm 7, we define \(\psi={{{{{\rm{min}}}}}} ({\hat{Q}}_{{j}^{*}}^{{{{{{\bf{B}}}}}}_{-i}}(s,{c}^{*}),1-{\hat{Q}}_{i}^{{{{{{\bf{B}}}}}}_{-i}}(s,{c}^{*})-{\hat{Q}}_{{j}^{*}}^{{{{{{\bf{B}}}}}}_{-i}}(s,{c}^{*}))\), where \({j}^{*}={{{{{{{\mathrm{argmax}}}}}}}\,}_{j:{D}_{i,j}\ne {{\emptyset}}}{\hat{Q}}_{j}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,{c}^{*})\).

Finally, we define a Deviator agent pi parameterized by two thresholds tϕ, tψ. It follows the Baseline Negotiator behavior (as though ϕ < 0 in Algorithm 7) until the first turn where ϕ > tϕ and ψ < tψ. Then, the agent switches its behavior to that of the Conditional Deviator. In other words, this Deviator waits until a turn where the immediate gains from deviation are high enough and the ability of the other agent to retaliate is low enough, and only then deviates from an agreement. In our experiments the thresholds tϕ, tψ are tuned by applying a simple grid search over the space of parameters (see Fig. 8), though many other approaches could be used (such as Gradient Ascent or Simulated Annealing).

Defensive agent algorithms

Defensive agents are designed to deter others from deviating from agreements, while still retaining the advantage negotiation skills afford over non-communicating agents; they shun Deviators, or work as a group to repel them by negatively responding to deviations so as to reduce the gains from such deviations. Defensive agents act identically to the Baseline Negotiator agents described in detail in the “Baseline Negotiator algorithms” section, but change their behavior when another agent deviates from an agreement made with them. When a defensive agent pi accepts a deal D = (Ri,Rj) with a counterpart agent pj, it examines the behavior of the counterpart pj in the following action phase. If pj deviates from the agreement by taking a disallowed action aj ∉ Rj, it changes its behavior towards this deviator for the remainder of the game. We now fully describe the Binary Negotiators and Sanctioning agents discussed in the section “Negotiating with non-binding agreements”.

Binary Negotiators respond to a deviation of pj by ceasing all communication with pj for the remainder of the game. In the Mutual Proposal protocol this means never proposing any contract to pj and hence never agreeing on any contract with pj for the remainder of the game, and in the Propose-Choose protocol this means never choosing any contract proposed by or to pj, hence never agreeing a contract with pj for the remainder of the game. This is done via an additional check on lines 8 and 17 of Algorithm 6.

A Sanctioning agent pi takes a more active role when responding to a deviation by player pj. Following such a deviation, the Sanctioning agent pi modifies its behavioral goal so as to attempt to lower the deviator pj’s reward in the game.

The standard SBR procedure, Algorithm 1 described in the section “The sampled best responses procedure”, estimates the expected value for player pi in the next state when taking action a as \({\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,a)\), and selects the actions that maximize this expected reward. Following a deviation by player pj against player pi, we consider the modified score \({\hat{Q}}_{i-\alpha j}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,a)={\hat{Q}}_{i}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,a)-\alpha {\hat{Q}}_{j}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}(s,a)\). This perturbed score represents a combination of the target player pi’s utility and the negative of player pj’s utility, where the parameter α controls the degree of importance placed on lowering player pj’s utility.

A Sanctioning Agent pi selects actions identically to the Baseline Negotiator of the section “Baseline Negotiator algorithms” until a deviation occurs by a peer pj. Following this point and until the end of the game, the Sanctioning Agent turns to selecting the actions maximizing the perturbed score \({\hat{Q}}_{i-\alpha j}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}\) defined above (in the negotiator algorithms, this affects the evaluation in RSS and on lines 5, 7, and 16 of the Choose phase of MBDS, but not in the Proposal phase). In the case where multiple players \({p}_{{j}^{1}},\ldots,{p}_{{j}^{k}}\) have deviated from contracts with pi, the negative utilities are combined to form \({\hat{Q}}_{i-\alpha {j}^{1}-\cdots -\alpha {j}^{k}}^{{{{{{{{{\bf{B}}}}}}}}}_{-i}}\), defined analogously. Thus, following a deviation by player pj, the Sanctioning agent pi optimizes for a perturbed score that represents a combination of maximizing the win probability of pi and minimizing the win probability of the deviator pj.

Data availability

The raw data for producing the figures is provided as Source data. Source data are provided with this paper.

Code availability

The experiments are based on the algorithms contained in the “Methods” section, which are presented in more detail using Python-style code snippets in Supplementary Note 12. These depend on the base No-Press Diplomacy policy and value functions39, which are available at https://github.com/deepmind/diplomacy.

References